What is Bit Depth for Satellite Data (and Images)

DEFINITION:

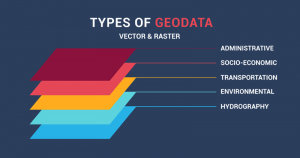

Bit depth in satellite images refers to the amount of information stored for each pixel. Higher bit depth helps detect subtle differences in land, water, or vegetation.

What is Bit Depth?

As described above in the definition, bit depth is the amount of detail in each pixel expressed in units of bits.

- A 1-bit raster contains two values (zero and one).

- An 8-bit raster ranges from 0 to 255 (256 values in total).

A 1-bit raster gives two shades – simply black and white – or yes and no. An 8-bit raster would give 256 shades of grey.
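The counts above come from a power of two: an n-bit pixel can hold 2^n distinct values. Here is a minimal Python sketch of that arithmetic (the function name `levels` is only illustrative):

```python
def levels(bit_depth: int) -> int:
    """Number of distinct values an n-bit pixel can hold: 2 ** n."""
    if bit_depth < 1:
        raise ValueError("bit depth must be at least 1")
    return 2 ** bit_depth


# Print the value count and digital number (DN) range for common bit depths.
for bits in (1, 2, 4, 8, 16):
    n = levels(bits)
    print(f"{bits:>2}-bit: {n} values, DN range 0-{n - 1}")
```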

A wider range of values lets pixels discriminate very slight differences in energy. Got it? Let’s study this a bit more:

8-bit vs 4-bit vs 2-bit Imagery

The examples below show the coast of Tokyo at different bit depths. In the 8-bit image, each band (red, green, and blue) has a pixel depth of 8 and 256 possible values: 2^8 = 256

And here is that same image in 4-bit with only 16 colors: 2^4 = 16

The 4-bit image has less variety in pixels than a classic Nintendo game, while the 8-bit image delivers more shades in each band, with 256 in total.

You lose a lot of quality without the range of shading as seen in this 2-bit image:

Bit Depth Examples for Satellites

The exact range of digital numbers (DN) that a sensor uses depends on its radiometric resolution.

For example:

- Landsat Multispectral Scanner (MSS) measures radiation on a 0-63 DN scale.

- Landsat Thematic Mapper (TM) measures it on a 0-255 scale.

- Landsat-8 images are delivered as 16-bit data (ranging from 0-65535), although the sensor itself records 12 bits.

As a trend, bit depth has increased over the years as the quality of sensors has improved.
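You can work backwards from a DN range to the bit depth it implies. The sketch below uses the maximum DN values listed above; the helper name `bits_needed` is just for illustration:

```python
# Maximum DN for each sensor, taken from the list above.
max_dn = {
    "Landsat MSS": 63,
    "Landsat TM": 255,
    "Landsat-8": 65535,
}


def bits_needed(max_value: int) -> int:
    """Smallest number of bits that can store values from 0 to max_value."""
    return max_value.bit_length()


for sensor, top in max_dn.items():
    print(f"{sensor}: 0-{top} needs {bits_needed(top)} bits")
```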

Spectral, Spatial, and Radiometric Resolution

You won’t necessarily increase the quality of an image with higher radiometric resolution.

It will result in a greater range of values for each pixel. But it also depends on spatial and spectral resolution.

A sensor should have a balance between spectral, spatial, and radiometric resolution.

With finer spatial resolution, less energy from the ground is detected per pixel. Smaller pixels cover less ground area, so less upwelling energy reaches the sensor from each one. To compensate, you’d have to broaden the wavelength range to increase the amount of energy detected.
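To get a feel for how quickly ground area shrinks with pixel size, here is a rough sketch. The 30 m and 10 m pixel sizes are illustrative values, and treating detected energy as roughly proportional to ground area is a simplifying assumption that holds only if everything else stays equal:

```python
def ground_area_m2(pixel_size_m: float) -> float:
    """Ground area covered by one square pixel, in square metres."""
    return pixel_size_m ** 2


# Illustrative pixel sizes, not tied to a specific sensor.
coarse = ground_area_m2(30.0)
fine = ground_area_m2(10.0)

print(f"30 m pixel: {coarse:.0f} m^2")
print(f"10 m pixel: {fine:.0f} m^2")
print(f"A 10 m pixel covers {fine / coarse:.1%} of the area of a 30 m pixel")
```

Cutting the pixel size to a third leaves only about a ninth of the ground area per pixel.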

Each sensor has a specific objective. For example, a sensor with coarser spatial resolution (bigger pixels) gives up spatial detail but can obtain greater spectral and radiometric resolution.

Bit Depth and File Size

Higher radiometric resolution means trade-offs. As you increase the pixel depth, the file size also becomes larger.

- The 8-bit Sentinel image of Tokyo is 355 MB

- The 4-bit Sentinel image of Tokyo is 46 MB

- And the 2-bit Sentinel image of Tokyo is just 12 MB

If file storage is a concern, then consider the bit depth in an image. A lower range of values in an image means less memory consumed (but also less quality).
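As a rough guide, the uncompressed size of a raster is width × height × bands × bit depth. The sketch below assumes a hypothetical 10,000 × 10,000 pixel, 3-band image and tightly packed pixels. Real files, like the Tokyo examples above, also carry headers and compression, and many formats do not pack pixels smaller than a byte, so actual sizes will not follow this exactly:

```python
def raw_size_bytes(width: int, height: int, bands: int, bit_depth: int) -> int:
    """Uncompressed size in bytes, assuming pixels are packed with no padding."""
    total_bits = width * height * bands * bit_depth
    return (total_bits + 7) // 8  # round up to a whole byte


# Hypothetical image dimensions, chosen only for illustration.
WIDTH, HEIGHT, BANDS = 10_000, 10_000, 3

for bits in (8, 4, 2):
    size_mb = raw_size_bytes(WIDTH, HEIGHT, BANDS, bits) / 1_000_000
    print(f"{bits}-bit: {size_mb:.0f} MB uncompressed")
```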

On a separate note, you can reduce the file size by choosing a lossy or lossless compression method.

Lossy compression (like JPEG) permanently eliminates certain information, especially redundant information, even though the user may not notice it. Lossless compression (like LZ77), on the other hand, reduces the file size while retaining every pixel value.
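Here is a small sketch of what "lossless" means in practice. It uses Python's built-in `zlib` module, whose DEFLATE algorithm combines LZ77 with Huffman coding. The pixel data is a made-up gradient, not a real satellite image:

```python
import zlib

# Hypothetical 8-bit pixel values: one 256-value gradient row repeated 100 times.
row = bytes(range(256))
pixels = row * 100

compressed = zlib.compress(pixels, 9)  # 9 = highest compression level
restored = zlib.decompress(compressed)

print(len(pixels), "bytes uncompressed")
print(len(compressed), "bytes compressed")
print("identical after round trip:", restored == pixels)
```

The file gets smaller, yet every pixel value comes back unchanged after decompression. How much you save depends heavily on the image; this repetitive gradient compresses far better than a real scene would.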

What’s Next?

Now that you have the basic knowledge of how radiometric resolution works…

See for yourself just how much detail is in a pixel…

Take a satellite image and convert it from 8-bit to 4-bit. In ArcGIS, select Data Management Tools > Raster > Raster Dataset > Copy Raster. Make sure to scale the pixel values, so they are rescaled from the larger bit depth to the smaller one.
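If you'd like to see the idea behind scaling in code, here is a minimal sketch of a linear rescale from 8-bit to 4-bit. It is one simple way to do it and not necessarily what ArcGIS does internally; the function name `rescale_dn` and the sample values are made up for illustration:

```python
def rescale_dn(dn: int, from_bits: int, to_bits: int) -> int:
    """Linearly rescale a digital number to a different bit depth, rounding to nearest."""
    from_max = 2 ** from_bits - 1
    to_max = 2 ** to_bits - 1
    if not 0 <= dn <= from_max:
        raise ValueError("dn is outside the source range")
    # Integer arithmetic with round-half-up avoids floating-point surprises.
    return (dn * to_max + from_max // 2) // from_max


# Hypothetical 8-bit pixel values.
row_8bit = [0, 100, 101, 128, 255]
row_4bit = [rescale_dn(dn, 8, 4) for dn in row_8bit]

print(row_8bit)
print(row_4bit)  # [0, 6, 6, 8, 15]
```

Notice that 100 and 101 both become 6. That small difference in the 8-bit data is gone in the 4-bit version, which is exactly the detail you lose.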

You’ll see how the amount of detail in each pixel decreases, and this loss in information can be costly in remote sensing applications. On the other hand, it could save you gigabytes of file storage space.
