GIS Data Engineering: Begin Your ETL Journey

Geospatial Data Engineering

Data engineering in GIS prepares spatial data for analysis. For example, it fills in missing values, adds fields, geo-enriches records, and cleanses values.
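To make these tasks concrete, here is a minimal Python sketch that cleans a few hypothetical parcel records. It trims and normalizes text values, fills a missing category with a placeholder, and adds a derived field. The record layout, the field names, and the "unknown" placeholder are assumptions for illustration only.

```python
# Hypothetical raw records: field names and values are illustrative assumptions.
raw_records = [
    {"name": "  Oak Park ", "land_use": "PARK", "area_m2": 5200.0},
    {"name": "Main St Lot", "land_use": None, "area_m2": 830.0},
    {"name": "riverside", "land_use": "park ", "area_m2": None},
]

def clean_record(record):
    """Return a cleaned copy of one record without mutating the input."""
    cleaned = dict(record)
    # Cleanse values: strip stray whitespace and normalize capitalization.
    cleaned["name"] = cleaned["name"].strip().title()
    # Fill in missing values: use an explicit placeholder category (an assumption).
    land_use = cleaned.get("land_use")
    cleaned["land_use"] = land_use.strip().lower() if land_use else "unknown"
    # Add a field: area in hectares (1 ha = 10,000 m2), left empty if area is missing.
    area = cleaned.get("area_m2")
    cleaned["area_ha"] = round(area / 10_000, 4) if area is not None else None
    return cleaned

cleaned_records = [clean_record(r) for r in raw_records]
for row in cleaned_records:
    print(row)
```

Returning a copy instead of editing records in place keeps the raw input available, which makes it easier to re-run or audit the cleaning step.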

Typically, the whole data science workflow starts with data engineering and the necessary ETL workflow.

Data engineering is often the most time-consuming part of data science. But it's also one of the most crucial, because an analysis is only as good as the data that goes into it.

In this article, we’ll explore the essential components of geospatial data engineering. We’ll also discuss how it can optimize spatial data for analysis.

Key Terminology in Data Engineering

Geospatial data is everywhere, and it's at the core of many data-driven, business-critical tasks. From mapping property boundaries to analyzing crop yields, geospatial analysis helps organizations make sense of their data.

Just like any other type of data, geospatial data goes through routine processing. This enables data scientists to provide insights to your business teams. Here are some of the key terms that typically accompany the data engineering process:

DATA WAREHOUSE: A central repository that integrates structured data from various sources and is optimized for reporting and analysis. It's like a library of data, organized so that it's easy to find and query.

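DATA LAKE: A repository that stores large volumes of raw data in its native format, whether structured, semi-structured, or unstructured. Think of it as a dumping ground for data that hasn't been processed yet.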

DATABASE: An organized collection of structured data, typically stored in tables made up of rows and columns.

DATA PIPELINE: A series of tasks, each operating on a data set. A data pipeline delivers data from one system to another, typically to collect, store, and process data for analytical purposes.

EXTRACT, TRANSFORM, LOAD (ETL): The process of extracting data from one system, transforming it into a format that another system can consume, and finally loading it into the target system, where it's used for business analysis.
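A data pipeline, as defined above, is a chain of tasks in which each step takes a dataset and hands its output to the next. The sketch below models that idea with plain Python functions. The task names and the list-of-dicts dataset are hypothetical and don't reflect any particular pipeline tool.

```python
from functools import reduce

# Each task takes a dataset (a list of dicts) and returns a new dataset.
def drop_missing_coordinates(rows):
    return [r for r in rows if r.get("lat") is not None and r.get("lon") is not None]

def round_coordinates(rows, digits=5):
    return [{**r, "lat": round(r["lat"], digits), "lon": round(r["lon"], digits)} for r in rows]

def tag_hemisphere(rows):
    return [{**r, "hemisphere": "N" if r["lat"] >= 0 else "S"} for r in rows]

def run_pipeline(dataset, tasks):
    """Apply tasks in order, passing each task's output to the next one."""
    return reduce(lambda data, task: task(data), tasks, dataset)

source = [
    {"id": 1, "lat": 40.7127753, "lon": -74.0059728},
    {"id": 2, "lat": None, "lon": 12.5},
    {"id": 3, "lat": -33.8688197, "lon": 151.2092955},
]

result = run_pipeline(source, [drop_missing_coordinates, round_coordinates, tag_hemisphere])
print(result)
```

Because every task has the same input and output shape, steps can be reordered, tested, or reused independently.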

ETL – Extract, Transform, Load

ETL (Extract, Transform, Load) is a series of processes that gets data ready for analysis and business insights. As part of a data pipeline, it moves data from one database to one or more target databases.

You can think of ETL as a relay race. Data comes into the system at one point, where it’s transformed. Then, it is passed from one runner to the next until it reaches its final destination.

| Process | Description |
|---|---|
| Extract | This step obtains data from a source system that typically isn't optimized for analytics. |
| Transform | This step prepares the data by filtering, aggregating, combining, and cleansing it so it can yield valuable insights. |
| Load | This step loads the transformed data into an internal or external application, such as a data visualization platform like Tableau. |
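The three steps in the table can be sketched end to end with Python's standard library. This minimal example assumes a small CSV export as the source system and an in-memory SQLite database as the target. The column names, the `parcels` table, and the cleaning rules are illustrative assumptions, not a production design.

```python
import csv
import io
import sqlite3

# Hypothetical source export: stands in for a system that isn't optimized for analytics.
SOURCE_CSV = """parcel_id,owner,lat,lon,area_m2
P-001, Smith ,45.4215,-75.6972,1200
P-002,Jones,,-75.7000,950
P-003,LEE,45.4300,-75.6900,
"""

def extract(text):
    """Extract: read raw rows from the source as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without coordinates, cleanse text, cast numbers."""
    out = []
    for row in rows:
        if not row["lat"] or not row["lon"]:
            continue  # filter: a parcel without coordinates can't be mapped
        out.append((
            row["parcel_id"],
            row["owner"].strip().title(),
            float(row["lat"]),
            float(row["lon"]),
            float(row["area_m2"]) if row["area_m2"] else None,
        ))
    return out

def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS parcels "
        "(parcel_id TEXT PRIMARY KEY, owner TEXT, lat REAL, lon REAL, area_m2 REAL)"
    )
    conn.executemany("INSERT INTO parcels VALUES (?, ?, ?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
print(conn.execute("SELECT parcel_id, owner, area_m2 FROM parcels ORDER BY parcel_id").fetchall())
```

Keeping each step in its own function mirrors the table: you can swap the CSV reader for another source or SQLite for another target without touching the transform logic.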

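Many companies now choose ELT (Extract, Load, Transform) over ETL. With ELT, raw data is loaded into the target system, such as a cloud data warehouse, before it's transformed, so the transformation can run on the warehouse's own processing power.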
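With ELT, the raw data lands in the target first and the transformation runs inside it, usually as SQL. Here is a hedged sketch of that ordering that uses an in-memory SQLite database as a stand-in for a cloud warehouse. The table names and SQL cleanup rules are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: land the raw records as-is, keeping every value as text.
conn.execute("CREATE TABLE raw_parcels (parcel_id TEXT, owner TEXT, lat TEXT, lon TEXT)")
conn.executemany(
    "INSERT INTO raw_parcels VALUES (?, ?, ?, ?)",
    [
        ("P-001", " smith ", "45.4215", "-75.6972"),
        ("P-002", "Jones", "", "-75.7000"),
        ("P-003", "LEE", "45.4300", "-75.6900"),
    ],
)

# Transform: run SQL inside the target to build a cleaned, typed table.
conn.execute("""
    CREATE TABLE parcels AS
    SELECT parcel_id,
           UPPER(TRIM(owner)) AS owner,
           CAST(lat AS REAL) AS lat,
           CAST(lon AS REAL) AS lon
    FROM raw_parcels
    WHERE lat <> '' AND lon <> ''
""")

print(conn.execute("SELECT parcel_id, owner, lat FROM parcels ORDER BY parcel_id").fetchall())
```

Because the raw table stays in the target, you can rewrite and re-run the transformation later without extracting the data again.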

Data Engineering Tools

Data engineering is the process of collecting data from various sources and building a data pipeline that moves it from its original source to a data warehouse. Spatial analysis is important for data-driven processes, but spatial data adds extra challenges to this work.

Despite the added complexity, data engineering has been gaining traction over the last several years. Here are some of the key data engineering software applications with native support for geospatial data.

Snowflake

Snowflake is a cloud-based data warehouse and data lake that gathers data from various sources. It's delivered as software as a service (SaaS) and provides scalable data storage and processing, along with flexible analytics designed to be fast and easy to use. Its SQL query engine was built specifically for the cloud. For geospatial data, Snowflake supports the GEOGRAPHY and GEOMETRY data types and can read and write spatial data in formats such as WKT, WKB, and GeoJSON.
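One practical detail when preparing point data for Snowflake's GEOGRAPHY columns is coordinate order: WKT and GeoJSON both list longitude before latitude. The Python sketch below builds WKT and GeoJSON strings from hypothetical latitude/longitude records. It assumes you would then load these strings and convert them with Snowflake's `TO_GEOGRAPHY` function, which isn't shown here.

```python
import json

def to_wkt_point(lat, lon):
    """Return a WKT point. WKT orders coordinates as (longitude latitude)."""
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        raise ValueError(f"coordinates out of range: lat={lat}, lon={lon}")
    return f"POINT({lon} {lat})"

def to_geojson_point(lat, lon):
    """Return a GeoJSON Point geometry. GeoJSON also orders coordinates [lon, lat]."""
    return json.dumps({"type": "Point", "coordinates": [lon, lat]})

# Hypothetical records as (name, latitude, longitude).
stores = [("Store A", 49.2827, -123.1207), ("Store B", 43.6532, -79.3832)]
for name, lat, lon in stores:
    print(name, to_wkt_point(lat, lon), to_geojson_point(lat, lon))
```

The range check catches the most common mistake, swapped latitude and longitude, before bad points reach the warehouse (it only fires when the swapped value exceeds 90 degrees).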

Apache Airflow

Apache Airflow is an open-source, Python-based tool for building and orchestrating data pipelines, including ETL jobs. Each process is a task, and a pipeline's tasks and their dependencies are defined as a Directed Acyclic Graph (DAG) that connects one process to the next. In addition, Airflow provides tools to write, schedule, iterate on, and monitor data pipelines.

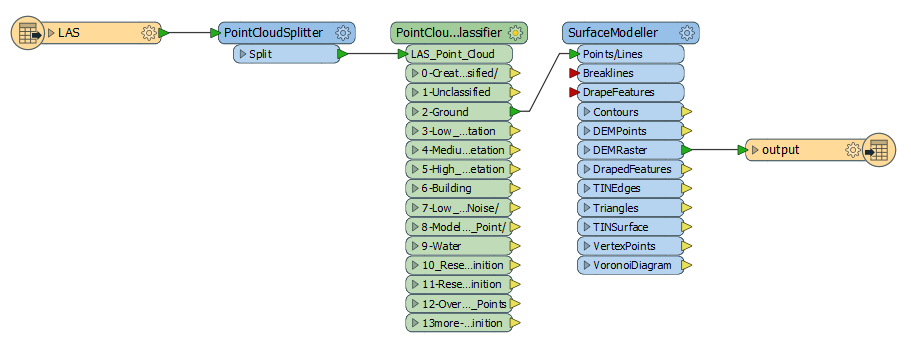
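To illustrate what a DAG of tasks means in practice, the sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) rather than Airflow itself. Each task lists the tasks it depends on, and the sorter produces an execution order that respects every dependency. The task names are hypothetical; in Airflow you would declare equivalent dependencies between tasks inside a DAG definition.

```python
from graphlib import TopologicalSorter

# Hypothetical spatial ETL tasks mapped to the set of tasks each one depends on.
dependencies = {
    "extract_parcels": set(),
    "extract_roads": set(),
    "reproject_parcels": {"extract_parcels"},
    "reproject_roads": {"extract_roads"},
    "spatial_join": {"reproject_parcels", "reproject_roads"},
    "load_warehouse": {"spatial_join"},
}

sorter = TopologicalSorter(dependencies)
# static_order() raises graphlib.CycleError if the graph contains a cycle,
# which is why pipelines must be acyclic.
order = list(sorter.static_order())
print(order)
```

Independent branches, such as the two extract tasks here, have no ordering between them, which is what lets an orchestrator run them in parallel.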

Feature Manipulation Engine (FME)

At its core, FME by Safe Software is a specialist in spatial ETL. With FME Cloud, it offers a flexible way to control the flow of data, but it also lets you work outside its own cloud infrastructure, for example on AWS. You build ETL workflows in FME Workbench by creating workspaces that connect readers, transformers, and writers. This lets many different geospatial formats work well together.
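FME workspaces are built visually, but the reader, transformer, and writer pattern they follow can be sketched in a few lines of Python. This is not FME's API: the generator functions, field names, and the choice of GeoJSON as the output format are assumptions that only mirror the concept of chaining these three kinds of steps.

```python
import csv
import io
import json

def csv_point_reader(text):
    """Reader: yield one feature per CSV row with float coordinates."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"name": row["name"], "lat": float(row["lat"]), "lon": float(row["lon"])}

def uppercase_name_transformer(features):
    """Transformer: modify attributes as features stream through."""
    for feature in features:
        yield {**feature, "name": feature["name"].upper()}

def geojson_writer(features):
    """Writer: serialize features as a GeoJSON FeatureCollection string."""
    collection = {
        "type": "FeatureCollection",
        "features": [
            {
                "type": "Feature",
                "geometry": {"type": "Point", "coordinates": [f["lon"], f["lat"]]},
                "properties": {"name": f["name"]},
            }
            for f in features
        ],
    }
    return json.dumps(collection)

# Hypothetical CSV input with point locations.
SOURCE = "name,lat,lon\nharbour,44.6488,-63.5752\nairport,44.8808,-63.5086\n"
output = geojson_writer(uppercase_name_transformer(csv_point_reader(SOURCE)))
print(output)
```

Using generators lets features stream from reader to writer one at a time, similar in spirit to how features flow between connected steps in a workspace.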


Alteryx

Alteryx is another data engineering tool in which you execute jobs as a DAG, much like Apache Airflow. It specializes in ETL processing, so you can extract data, enrich it with data from other sources, and then move the transformed data to Snowflake or other cloud-based platforms.

Elasticsearch

Elasticsearch is useful for searching and analyzing many types of data, including text, numeric, and geospatial data. This data engineering tool helps with GIS integration because it works with the Elastic Maps application for visualizing your geospatial data.
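As an example of this geospatial support, Elasticsearch indexes point locations with the `geo_point` field type and can filter them with a `geo_distance` query. The Python sketch below only builds the JSON request bodies. The field names, coordinates, and radius are hypothetical, and sending the requests (for example, with the official Python client or plain HTTP) is left out.

```python
import json

# Index mapping: declare "location" as a geo_point so spatial queries can use it.
mapping = {
    "mappings": {
        "properties": {
            "name": {"type": "text"},
            "location": {"type": "geo_point"},
        }
    }
}

# A hypothetical document: a geo_point value can be an object with "lat" and "lon".
document = {"name": "Trailhead parking", "location": {"lat": 46.8523, "lon": -121.7603}}

# Search body: keep only documents within 5 km of a reference point.
query = {
    "query": {
        "bool": {
            "filter": {
                "geo_distance": {
                    "distance": "5km",
                    "location": {"lat": 46.8500, "lon": -121.7500},
                }
            }
        }
    }
}

print(json.dumps(mapping, indent=2))
print(json.dumps(query, indent=2))
```

Putting the distance check in a `bool` filter rather than a scoring query means it only includes or excludes documents and doesn't affect relevance scores.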

Databricks

The Databricks Geospatial Lakehouse is a data engineering platform for massive-scale spatial data science and collaboration. Databricks is one of the major players in data engineering, and you can connect CARTO to it through the CARTO Spatial Extension for Databricks. This can help you unlock even more potential for spatial analytics in the cloud.

Data Engineering in GIS

Spatial data engineering focuses on managing, processing, cleansing, and analyzing geospatial data. It's closely related to spatial data science, but data engineers focus more on implementing the data engineering process, whereas data scientists focus more on discovering and exploring the data.

Data engineering in GIS is the process of extracting and compiling data from multiple sources. Then, it transforms that spatial data into a format that’s useful for your business. Finally, it loads it into your data warehouse.
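A common transform step in spatial ETL is geo-enrichment, which derives new attributes from location. The sketch below adds the nearest hypothetical facility and its great-circle distance to each customer record, using the haversine formula with a mean Earth radius of 6,371 km. The records, facility names, and spherical-Earth simplification are assumptions for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; treats the Earth as a sphere

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

# Hypothetical reference layer used for enrichment: name -> (lat, lon).
facilities = {"North depot": (51.5500, -0.1000), "South depot": (51.4500, -0.1000)}

def enrich_nearest_facility(record):
    """Add the nearest facility name and its distance (km) to a record with lat/lon."""
    distances = {
        name: haversine_km(record["lat"], record["lon"], lat, lon)
        for name, (lat, lon) in facilities.items()
    }
    nearest = min(distances, key=distances.get)
    return {**record, "nearest_facility": nearest, "distance_km": round(distances[nearest], 2)}

customers = [{"id": 1, "lat": 51.5400, "lon": -0.1000}, {"id": 2, "lat": 51.4600, "lon": -0.1200}]
enriched = [enrich_nearest_facility(c) for c in customers]
print(enriched)
```

This brute-force comparison is fine for a small reference layer. For large datasets, a spatial index or the spatial functions of a warehouse or GIS library would be the more typical choice.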

This hands-on, detail-oriented profession requires data engineers to be patient problem-solvers who enjoy meticulous work. Adding geospatial data to the equation increases the complexity even further, especially for spatial analytics in the cloud.

In this article, we've only scratched the surface of data engineering in GIS. Is your focus on spatial data engineering? Please let us know your thoughts in the comment section below.
