What is Remote Sensing? The Definitive Guide

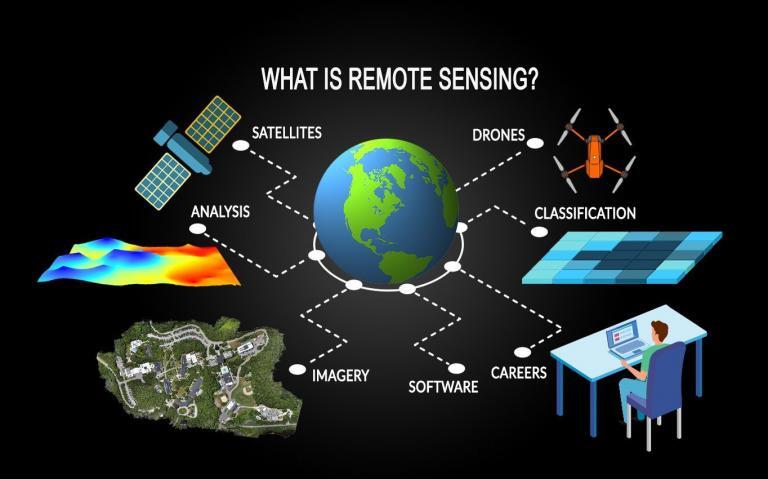

What is Remote Sensing?

Remote sensing is the science of obtaining the physical properties of an area without being there. It allows users to capture, visualize, and analyze objects and features on the Earth’s surface. Once imagery is collected, we can classify it into land cover and use it in other types of analysis.


Chapter 1. Sensor Types

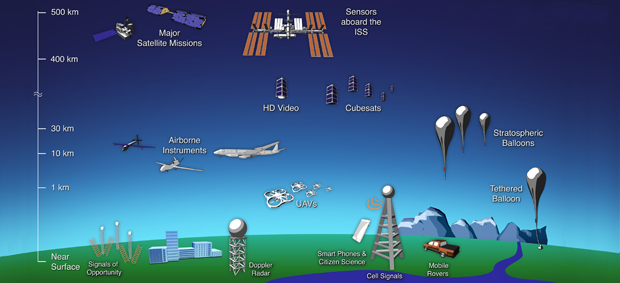

Remote sensing uses a sensor to capture an image. For example, airplanes, satellites, and UAVs serve as platforms that carry specialized sensors.

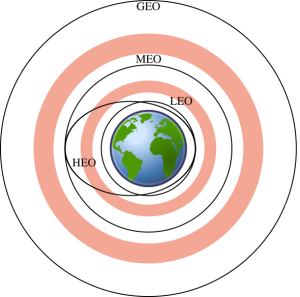

The diagram below shows the major remote sensing technologies and their typical altitudes.

TYPES OF SENSORS

Each type of sensor has its own advantages and disadvantages. When you want to capture imagery, you have to consider factors like flight restrictions, image resolution, and coverage.

For example, satellites capture data on a global scale. But drones are a better fit for flying in small areas. Finally, airplanes and helicopters take the middle ground.

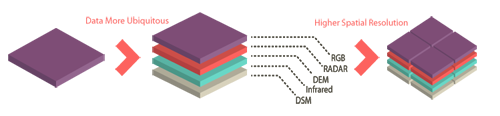

IMAGE RESOLUTION

For Earth observation, you also have to consider image resolution. Remote sensing divides image resolution into three different types:

- Spatial resolution

- Spectral resolution

- Temporal resolution

SPATIAL RESOLUTION

Spatial resolution is the level of detail in the pixels of an image. High spatial resolution means more detail and a smaller pixel size, whereas lower spatial resolution means less detail and a larger pixel size.

Typically, UAV imagery has one of the highest spatial resolutions. Even though satellites orbit far higher than any aircraft, some can capture imagery with a pixel size of 50 cm or finer.
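To see how pixel size drives both detail and data volume, this short sketch counts the square pixels needed to cover a 1 km × 1 km study area. The helper name `pixels_to_cover` is illustrative, and the pixel sizes are example values: 0.5 m matches the satellite figure above, and 30 m is the pixel size of Landsat’s multispectral bands.

```python
import math

def pixels_to_cover(side_m: float, pixel_size_m: float) -> int:
    """Count the square pixels needed to cover a square area (illustrative helper).

    Partial pixels at the edge are rounded up to whole pixels.
    """
    pixels_per_side = math.ceil(side_m / pixel_size_m)
    return pixels_per_side ** 2

# A 1 km x 1 km study area at three example pixel sizes
for pixel_size_m in (0.5, 2.0, 30.0):
    count = pixels_to_cover(1000, pixel_size_m)
    print(f"{pixel_size_m:>4} m pixels: {count:,} pixels")
```

Halving the pixel size quadruples the pixel count, which is one reason high-resolution imagery takes more storage and processing.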

READ MORE: Maxar Satellite Imagery: Worldview, GeoEye and IKONOS

SPECTRAL RESOLUTION

Spectral resolution is the amount of spectral detail in a band. High spectral resolution means the bands are narrower, whereas low spectral resolution means broader bands that each cover more of the spectrum.

TEMPORAL RESOLUTION

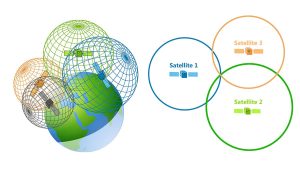

Temporal resolution is the time it takes for a sensor to revisit and image the same location. UAVs, airplanes, and helicopters are completely flexible, so they can fly whenever they are needed. But satellites orbit the Earth in set paths, so their revisit time depends on their orbit.
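Because a satellite’s revisit time is fixed by its orbit, its acquisition dates over a given spot can be predicted. The sketch below lists those dates under a deliberately simplified model: a perfectly regular revisit interval, with no clouds or missed passes. The 16-day interval is Landsat-8’s nominal repeat cycle; the start date and the `acquisition_dates` name are hypothetical.

```python
from datetime import date, timedelta

def acquisition_dates(first_pass: date, revisit_days: int, end: date) -> list[date]:
    """List the dates a satellite passes over the same spot (illustrative helper).

    Simplified model: assumes a perfectly regular revisit interval and
    ignores cloud cover, off-nadir pointing, and missed acquisitions.
    """
    dates = []
    current = first_pass
    while current <= end:
        dates.append(current)
        current += timedelta(days=revisit_days)
    return dates

# Hypothetical first pass; 16 days is Landsat-8's nominal repeat cycle
passes = acquisition_dates(date(2024, 1, 1), 16, date(2024, 3, 31))
print([d.isoformat() for d in passes])
```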

Global Positioning System (GPS) satellites are in medium Earth orbit (MEO). Because they follow a continuous orbital path, revisit times are consistent. This means a GPS receiver can almost always track at least four satellites, the minimum it needs for a three-dimensional position fix.

READ MORE: Trilateration vs Triangulation – How GPS Receivers Work

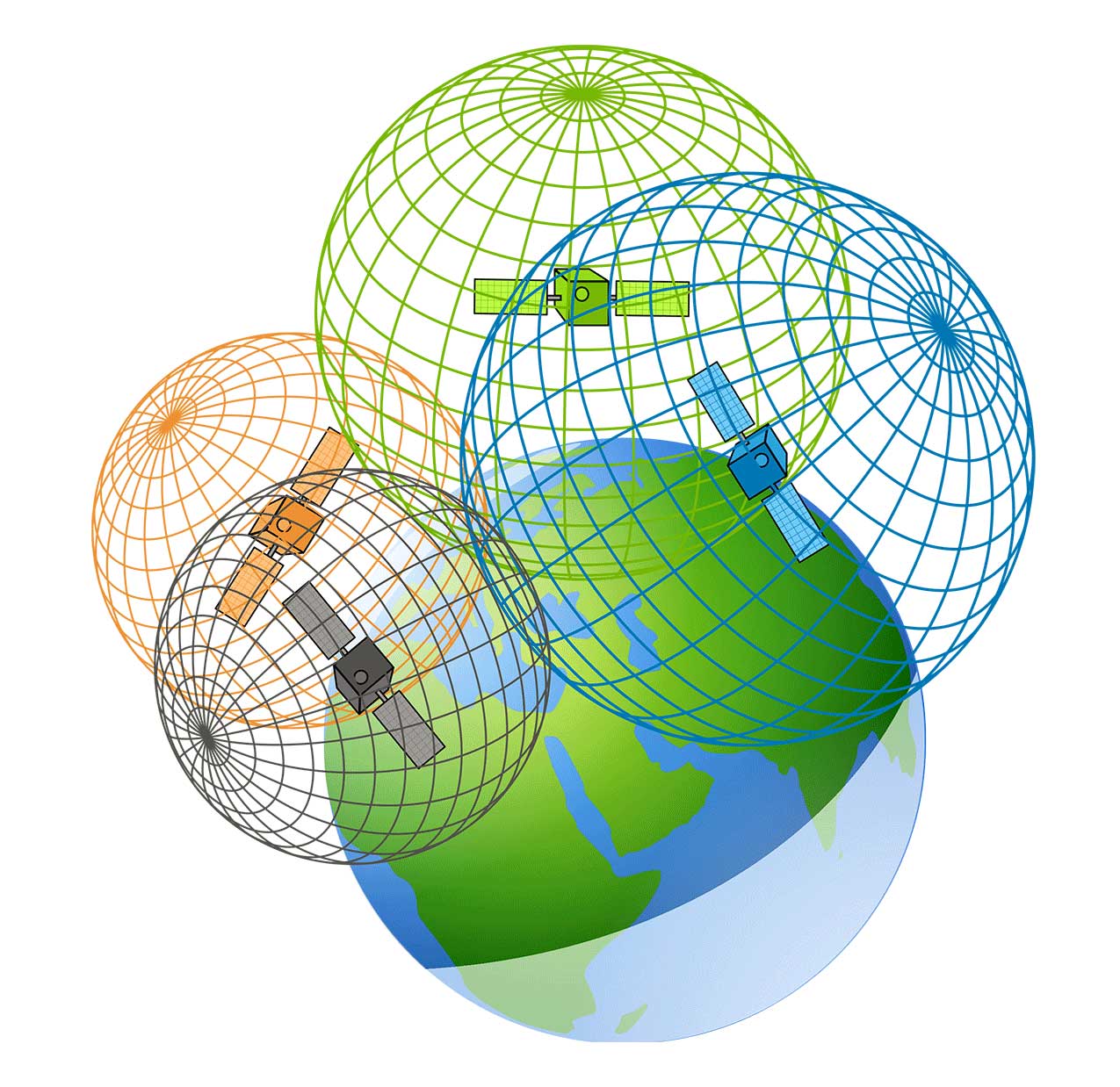

TYPES OF ORBITS

Three common types of orbits are:

- Geostationary orbits match the Earth’s rate of rotation.

- Sun-synchronous orbits keep the angle of sunlight on the surface of the Earth as consistent as possible.

- Polar orbits pass above or nearly above both poles of Earth.

It’s the satellite’s height above the Earth’s surface that determines the time it takes for a complete orbit. If a satellite has a higher altitude, the orbital period increases.
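Kepler’s third law makes this relationship precise. For a circular orbit of radius a (Earth’s radius plus the altitude), the period is T = 2π√(a³/μ), where μ is Earth’s standard gravitational parameter. This minimal sketch assumes perfectly circular orbits and uses approximate altitudes for the ISS and GPS satellites; `orbital_period_s` is an illustrative name.

```python
import math

MU_EARTH = 3.986004418e14   # Earth's standard gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0       # Earth's equatorial radius, m

def orbital_period_s(altitude_m: float) -> float:
    """Period of a circular orbit at the given altitude, from Kepler's third law."""
    a = R_EARTH + altitude_m  # orbital radius = semi-major axis for a circle
    return 2 * math.pi * math.sqrt(a**3 / MU_EARTH)

orbits = [
    ("ISS, about 400 km", 400),
    ("GPS, about 20,200 km", 20_200),
    ("Geostationary, 35,786 km", 35_786),
]
for name, altitude_km in orbits:
    hours = orbital_period_s(altitude_km * 1000) / 3600
    print(f"{name}: {hours:.2f} h")
```

The periods come out at roughly 1.5 h, 12 h, and 23.9 h. The geostationary period equals one sidereal day, which is why such a satellite keeps pace with the Earth’s rotation.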

We categorize orbits by their altitude:

- Low Earth Orbit (LEO)

- Medium Earth Orbit (MEO)

- High Earth Orbit (HEO)

Weather, communications, and surveillance satellites are often found in high Earth orbit, while CubeSats, the ISS, and many other satellites are in low Earth orbit.

Chapter 2. Types of Remote Sensing

The two types of remote sensing sensors are:

- Passive sensors

- Active sensors

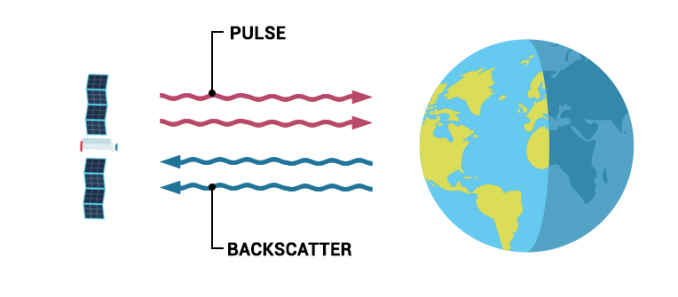

ACTIVE SENSORS

The main difference with active sensors is that they illuminate their target. Then, they measure the energy reflected back. For example, Radarsat-2 is an active sensor that uses synthetic aperture radar.

Imagine the flash of a camera. It brightens its target, and then the camera captures the returning light. Active sensors work on the same principle.

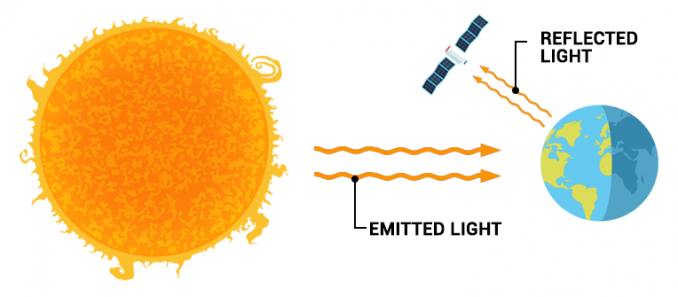

PASSIVE SENSORS

Passive sensors measure reflected light emitted from the sun. When sunlight reflects off the Earth’s surface, passive sensors capture that light.

For example, Landsat and Sentinel-2 carry passive sensors. They capture images by sensing reflected sunlight in different parts of the electromagnetic spectrum.

In short, passive remote sensing measures energy from the sun reflected off the surface, whereas active remote sensing illuminates its target and measures its backscatter.

Chapter 3. The Electromagnetic Spectrum

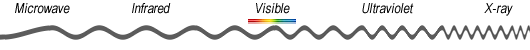

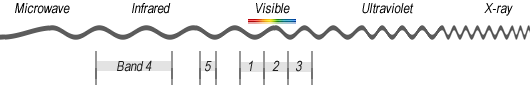

The electromagnetic spectrum ranges from short wavelengths (like X-rays) to long wavelengths (like radio waves).
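Wavelength (λ) and frequency (ν) describe the same property, linked by the speed of light: c = λν. This short sketch converts a few example wavelengths into frequencies; the wavelengths are illustrative values and `frequency_hz` is a hypothetical helper.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in a vacuum

def frequency_hz(wavelength_m: float) -> float:
    """Convert a wavelength to a frequency using c = wavelength * frequency."""
    return SPEED_OF_LIGHT / wavelength_m

# Example wavelengths (illustrative values), in metres
examples = {
    "Blue light, 450 nm": 450e-9,
    "Near-infrared, 850 nm": 850e-9,
    "Thermal infrared, 11 µm": 11e-6,
    "C-band radar, about 5.6 cm": 0.056,
}
for name, wavelength_m in examples.items():
    print(f"{name}: {frequency_hz(wavelength_m):.3e} Hz")
```

As the wavelength grows from visible light to radar, the frequency drops, so the two scales run in opposite directions.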

Our eyes only see the visible range (red, green, and blue). But other types of sensors can see beyond human vision. Ultimately, this is why remote sensing is so powerful.

ELECTROMAGNETIC SPECTRUM

Our eyes are sensitive to the visible spectrum (390-700 nm). But engineers design sensors to capture wavelengths beyond this range, within the atmospheric windows where the atmosphere lets most radiation through.

For example, near-infrared (NIR) is in the 700-1400 nm range. Vegetation looks green to our eyes because it reflects more green light than red or blue light.

But healthy vegetation reflects even more strongly in the near-infrared. That’s why we use indices like NDVI, which compare near-infrared and red reflectance, to identify vegetation.
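NDVI exploits this contrast: NDVI = (NIR − Red) / (NIR + Red). Values approach +1 for dense, healthy vegetation, sit near zero for bare soil, and are typically negative for water. In this sketch the reflectance values are hypothetical, and `ndvi` is an illustrative helper working on plain lists; in practice the same formula is applied pixel by pixel to whole raster bands.

```python
import math

def ndvi(nir: list[float], red: list[float]) -> list[float]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    Returns NaN where NIR + Red is zero (for example, no-data pixels).
    """
    return [
        (n - r) / (n + r) if (n + r) != 0 else math.nan
        for n, r in zip(nir, red)
    ]

# Hypothetical surface reflectances (0-1) for three pixels:
# healthy vegetation, bare soil, and water
nir = [0.50, 0.30, 0.02]
red = [0.08, 0.25, 0.05]
print([round(v, 2) for v in ndvi(nir, red)])
```

This prints `[0.72, 0.09, -0.43]`: the vegetation pixel stands out clearly from soil and water.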

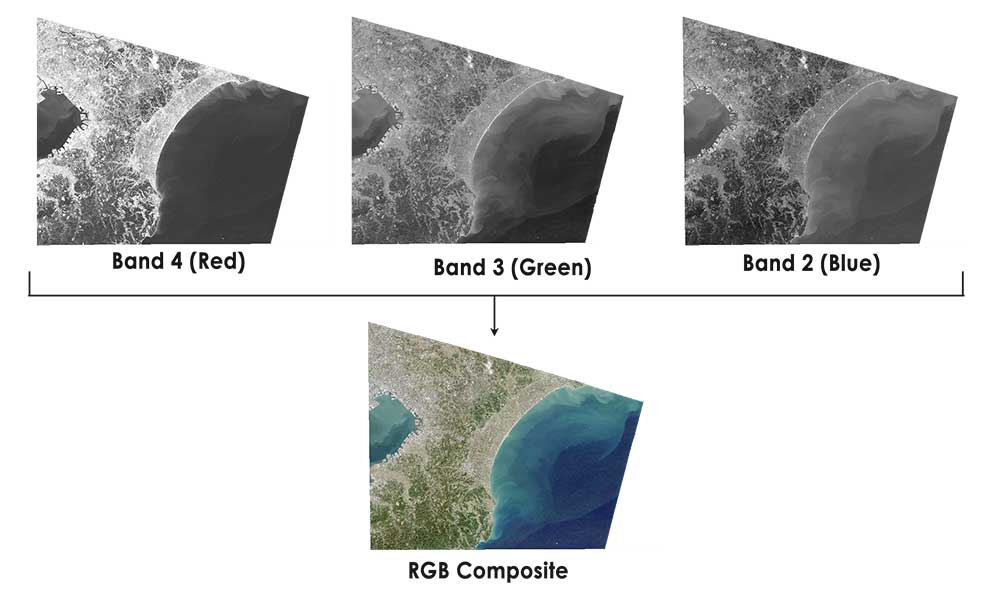

SPECTRAL BANDS

Spectral bands are groups of wavelengths. For example, ultraviolet, visible, near-infrared, thermal infrared, and microwave are spectral bands.

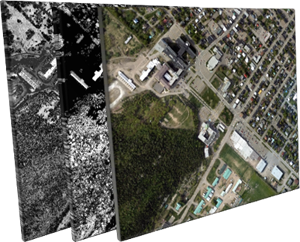

We categorize each spectral region based on its frequency (ν) or wavelength. There are two types of imagery for passive sensors:

- Multispectral imagery

- Hyperspectral imagery

The main difference between multispectral and hyperspectral imagery is the number of bands and how narrow they are. Hyperspectral images have hundreds of narrow bands, whereas multispectral images consist of 3-10 wider bands.

MULTISPECTRAL

Multispectral imagery generally refers to 3 to 10 bands, though some sensors carry a few more. For example, Landsat-8 produces 11 separate images for each scene:

- Coastal aerosol (0.43-0.45 µm)

- Blue (0.45-0.51 µm)

- Green (0.53-0.59 µm)

- Red (0.64-0.67 µm)

- Near-infrared (NIR) (0.85-0.88 µm)

- Short-wave infrared (SWIR 1) (1.57-1.65 µm)

- Short-wave infrared (SWIR 2) (2.11-2.29 µm)

- Panchromatic (0.50-0.68 µm)

- Cirrus (1.36-1.38 µm)

- Thermal infrared (TIRS 1) (10.60-11.19 µm)

- Thermal infrared (TIRS 2) (11.50-12.51 µm)
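Because some of these bands overlap (the panchromatic band spans most of the visible range), it can help to look up which bands record a given wavelength. This minimal sketch uses the ranges listed above; `LANDSAT8_BANDS` and `bands_covering` are illustrative names, not part of any Landsat library.

```python
# Landsat-8 band ranges from the list above, in micrometres (µm).
# LANDSAT8_BANDS and bands_covering are illustrative names.
LANDSAT8_BANDS = {
    "Coastal aerosol": (0.43, 0.45),
    "Blue": (0.45, 0.51),
    "Green": (0.53, 0.59),
    "Red": (0.64, 0.67),
    "NIR": (0.85, 0.88),
    "SWIR 1": (1.57, 1.65),
    "SWIR 2": (2.11, 2.29),
    "Panchromatic": (0.50, 0.68),
    "Cirrus": (1.36, 1.38),
    "TIRS 1": (10.60, 11.19),
    "TIRS 2": (11.50, 12.51),
}

def bands_covering(wavelength_um: float) -> list[str]:
    """Return the name of every band whose range includes the wavelength."""
    return [
        name
        for name, (low, high) in LANDSAT8_BANDS.items()
        if low <= wavelength_um <= high
    ]

print(bands_covering(0.55))  # ['Green', 'Panchromatic']
print(bands_covering(0.86))  # ['NIR']
```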

HYPERSPECTRAL

Hyperspectral imagery has much narrower bands (10-20 nm). A hyperspectral image has hundreds of bands.

For example, Hyperion (part of the EO-1 satellite) produces 220 spectral bands (0.4-2.5 µm).
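A quick calculation shows why this counts as hyperspectral. Assuming, as a simplification, that Hyperion’s 220 bands are spread evenly across 0.4-2.5 µm, each band is only about 9.5 nm wide, compared with 60 nm for Landsat-8’s green band (0.53-0.59 µm). The helper name is illustrative.

```python
def average_band_width_nm(start_nm: float, end_nm: float, n_bands: int) -> float:
    """Average band width if n_bands were spread evenly over [start_nm, end_nm]."""
    return (end_nm - start_nm) / n_bands

hyperion_nm = average_band_width_nm(400, 2500, 220)  # 0.4-2.5 µm, 220 bands
landsat_green_nm = 590 - 530                         # Landsat-8 green: 0.53-0.59 µm

print(f"Hyperion: about {hyperion_nm:.1f} nm per band")
print(f"Landsat-8 green band: {landsat_green_nm} nm wide")
```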

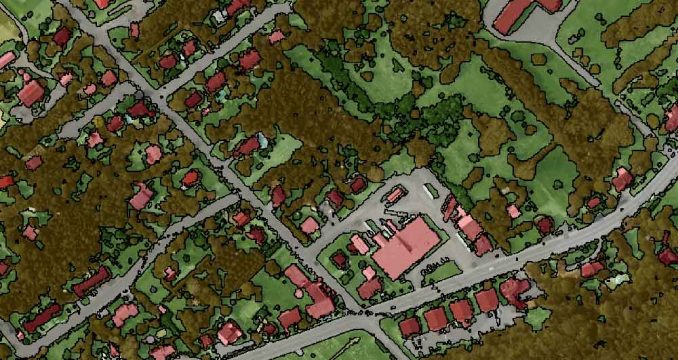

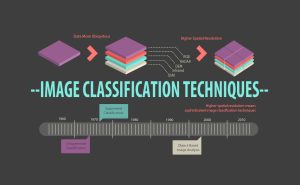

Chapter 4. Image Classification

When you examine a photo and try to pull out features and characteristics from it, you are performing image interpretation. We use image interpretation in forestry, military, and urban environments.

We can interpret features because objects have their own unique chemical compositions. In remote sensing, we distinguish these differences by obtaining their spectral signatures.

SPECTRAL SIGNATURES

Consider the mining industry: there are over 4,000 natural minerals on Earth, and each has its own chemical composition that makes it different from the others.

It’s the object’s chemical composition that drives its spectral signature. You can classify each mineral because it has its own unique spectral signature. The more spectral bands you have, the greater the potential for image classification.

A spectral signature is the amount of energy an object reflects across a range of wavelengths. Differences in spectral signatures are how we tell objects apart.
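One common way to compare signatures is the spectral angle used by the Spectral Angle Mapper (SAM) technique: treat each signature as a vector of reflectances and measure the angle between the vectors. Small angles mean similar shapes, and the angle ignores overall brightness, so shaded vegetation still matches sunlit vegetation. The four-band signatures (blue, green, red, NIR) are hypothetical, and `spectral_angle` is an illustrative helper.

```python
import math

def spectral_angle(a: list[float], b: list[float]) -> float:
    """Angle in radians between two spectral signatures treated as vectors.

    0 means identical shape; larger angles mean less similar spectra.
    Scaling a signature (brighter or darker) does not change the angle.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # guard rounding
    return math.acos(cos_theta)

# Hypothetical reflectance signatures in (blue, green, red, NIR)
vegetation = [0.04, 0.08, 0.05, 0.45]
shaded_vegetation = [x * 0.5 for x in vegetation]
water = [0.06, 0.05, 0.03, 0.01]

print(f"vegetation vs shaded vegetation: {spectral_angle(vegetation, shaded_vegetation):.3f} rad")
print(f"vegetation vs water: {spectral_angle(vegetation, water):.3f} rad")
```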

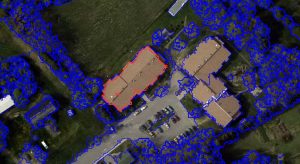

IMAGE CLASSIFICATION

When you assign classes to features on the ground, this is the process of image classification.

The three main methods to classify images are:

- Supervised classification

- Unsupervised classification

- Object-based image analysis

The goal of image classification is to produce a land use/land cover map. Using remote sensing software, we classify features such as water, wetlands, trees, and urban areas into land cover classes.
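As a minimal example of supervised classification, this sketch uses the minimum-distance-to-means rule: average the training pixels of each class into a mean signature, then assign each new pixel to the class whose mean is nearest. It is only one of the simplest supervised methods, not what any particular software uses by default. The training reflectances (green, red, NIR) are hypothetical, and `class_means` and `classify` are illustrative names.

```python
import math

def class_means(training: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """Average the training pixels of each class, band by band."""
    return {
        name: [sum(band) / len(pixels) for band in zip(*pixels)]
        for name, pixels in training.items()
    }

def classify(pixel: list[float], means: dict[str, list[float]]) -> str:
    """Assign the class whose mean signature is nearest in Euclidean distance."""
    return min(means, key=lambda name: math.dist(pixel, means[name]))

# Hypothetical training pixels: reflectance in (green, red, NIR)
training = {
    "water": [[0.05, 0.03, 0.01], [0.06, 0.04, 0.02]],
    "trees": [[0.08, 0.05, 0.45], [0.09, 0.06, 0.50]],
    "urban": [[0.15, 0.17, 0.20], [0.18, 0.20, 0.24]],
}
means = class_means(training)

for pixel in ([0.07, 0.05, 0.40], [0.05, 0.04, 0.02], [0.16, 0.18, 0.21]):
    print(pixel, "->", classify(pixel, means))
```

Unsupervised classification skips the training step and lets a clustering algorithm group similar pixels first; an analyst then labels the clusters.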

Chapter 5. Applications and Uses

There are hundreds of applications of remote sensing. From weather forecasting to GPS, it’s satellites in space that monitor, protect, and guide us in our daily lives.

LOCAL ISSUES

Commonly, we use UAVs, helicopters, and airplanes for local issues. But satellites can be useful for local study areas as well.

Here are some of the common sensor technologies:

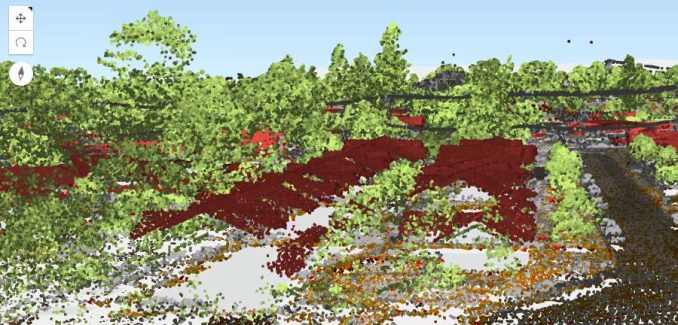

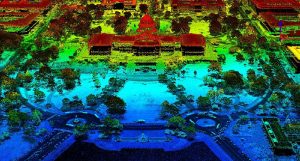

- Light Detection and Ranging (LiDAR)

- Sound Navigation and Ranging (Sonar)

- Radiometers and spectrometers

LiDAR and Sonar are both ideal for building topographic models. The main difference between the two is where they work: LiDAR is best suited for the ground, while Sonar works better underwater.
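Both technologies measure distance the same way: they time how long a pulse takes to reach a target and come back, then halve the round trip. The difference is the wave speed, light for LiDAR and sound for Sonar. In this sketch the 1,500 m/s speed of sound in seawater is a typical approximation (it varies with temperature, salinity, and depth), the travel times are hypothetical, and `range_from_echo` is an illustrative name.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0    # in a vacuum; slightly slower in air
SPEED_OF_SOUND_SEAWATER_M_S = 1500.0  # typical value; varies with conditions

def range_from_echo(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a target from the round-trip travel time of a pulse.

    The pulse travels out and back, so the one-way distance is half the total.
    """
    return wave_speed_m_s * round_trip_s / 2

# LiDAR: a return arriving 6.67 microseconds after the pulse left
print(f"LiDAR range: {range_from_echo(6.67e-6, SPEED_OF_LIGHT_M_S):.1f} m")
# Sonar: an echo from the seabed arriving 0.08 s after the ping
print(f"Sonar depth: {range_from_echo(0.08, SPEED_OF_SOUND_SEAWATER_M_S):.1f} m")
```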

By using these technologies, we build digital elevation models. From these topographic models, we can predict flood risk, identify archaeological sites, and delineate watersheds (to name a few).
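As a rough illustration of using a DEM for flood risk, the simplest approach is a "bathtub" model: flag every cell whose elevation is below a chosen water level. This sketch uses a hypothetical 3 × 4 DEM and an illustrative `flooded_cells` helper. Real flood modelling also checks whether low cells are connected to the water source and how water flows, which this ignores.

```python
def flooded_cells(dem: list[list[float]], water_level_m: float) -> list[list[bool]]:
    """Flag DEM cells whose elevation lies below a given water level.

    "Bathtub" approach: ignores hydrological connectivity, so it can
    overestimate the flooded area.
    """
    return [[elevation < water_level_m for elevation in row] for row in dem]

# Hypothetical 3 x 4 DEM, elevations in metres
dem = [
    [12.0, 10.5, 9.8, 11.2],
    [10.1, 9.2, 8.7, 10.4],
    [9.5, 8.9, 8.1, 9.9],
]
flood = flooded_cells(dem, water_level_m=9.5)
for row in flood:
    print("".join("~" if wet else "." for wet in row))

share = sum(map(sum, flood)) / (len(dem) * len(dem[0]))
print(f"{share:.0%} of cells lie below 9.5 m")
```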

GLOBAL ISSUES

As the world becomes more globalized, we are just starting to see the proliferation of remote sensing. For example, satellites tackle issues including:

- Navigating with global positioning systems

- Climate change monitoring

- Arctic surveillance

Satellite information is fundamentally important if we are going to solve some of the major challenges of our time. All things considered, it’s an expanding field reaching new heights.

For issues like climate change, natural resources, disaster management, and the environment, remote sensing provides a wealth of information on a global scale.
